LinuxBoot 34c3

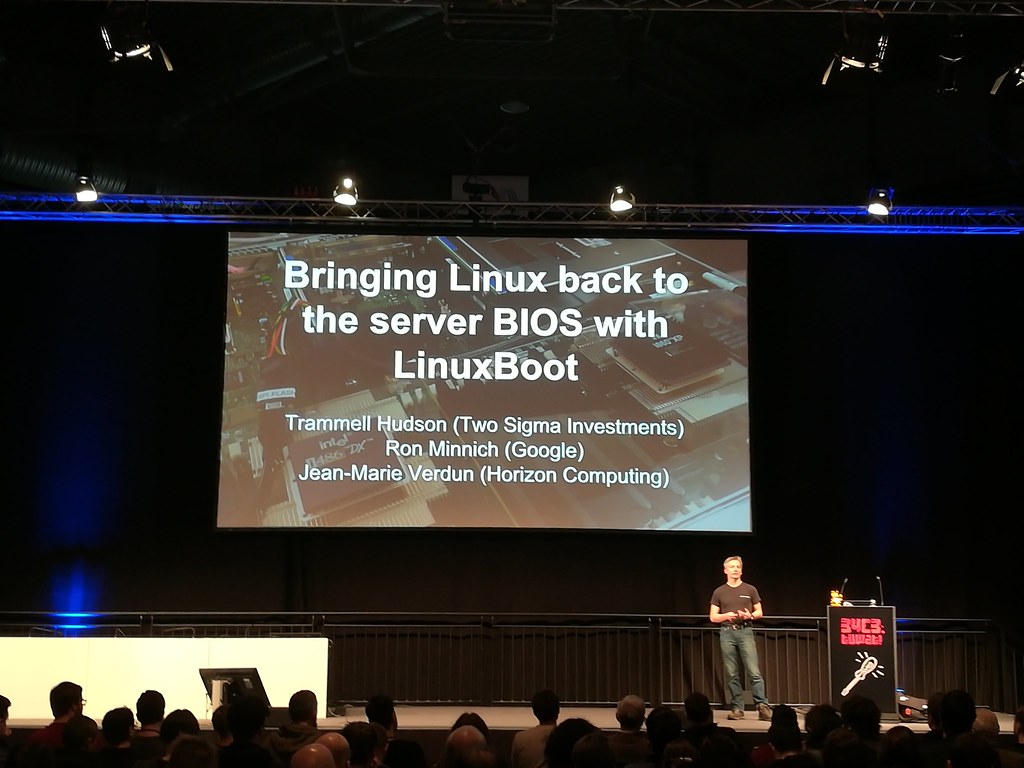

This is an annotated transcript of an overview talk that I gave at 34C3 (Leipzig 2017) entitled "Bringing Linux back to the server BIOS with LinuxBoot". If you prefer to watch, the 30-minute talk is on media.ccc.de.

Overview

For the past few years I’ve been researching firmware security and have found numerous boot-time vulnerabilities like Thunderstrike and Thunderstrike 2. I’m glad that I’m not here to talk about yet another vulnerability of the week, but instead to present a better way to build more secure, more flexible and more resilient servers by replacing the closed-source, proprietary vendor firmware with Linux.

This is not a new idea: LinuxBoot is a return to the old idea behind LinuxBIOS, which was a project started by my collaborator Ron Minnich back in the 90s. His group at Los Alamos built the third-fastest supercomputer in the world from a cluster using LinuxBIOS to improve reliability.

LinuxBIOS turned into coreboot in the early 2000s and, due to shrinking flash sizes, had the Linux part removed and became a more generic board initialization tool that was capable of booting other payloads. It now powers Chromebooks and other laptop projects like my slightly more secure Heads firmware, but doesn't support any modern server boards.

Most servers today use a variant of Intel’s Unified Extensible Firmware Interface, which replaced the original 16-bit BIOS. UEFI has a complex, multi-phase boot process, and it seems like a requirement that every talk on firmware must include this slide showing these various phases.

The first SEC or security phase establishes a root of trust and verifies the pre-EFI initialization phase. Execution then jumps into PEI, which initializes the memory controllers, the CPU interconnects, and a few other critical low-level things. When PEI is done the system is in a modern configuration: long mode, paging and ready for C. It then jumps into the Driver Execution Environment, DXE pronounced like "Dixie", which enumerates the various buses, initializes the other devices in the system and executes their Option ROMs. Once all the devices have been identified and initialized, control transfers to the boot device selection phase, BDS, which looks at NVRAM variables to determine from which disk, USB or network device to load the OS bootloader. The final OS bootloader is executed from that device, which then does whatever it does to finally jump into the real OS.

What we've done is replace almost all of that with Linux and some shell scripts.

LinuxBoot compromises by reusing the early board initialization part of the vendor firmware, and replaces the DXE, BDS, TSL, RT, etc. phases of the boot process with a specially built Linux kernel and flexible runtime that acts like a bootloader to find the real kernel for the server and invoke it via [kexec](https://en.wikipedia.org/wiki/kexec). Linux already has code to do PCI bus enumeration and device initialization, so it is unnecessary for UEFI’s DXE phase to do it. It isn't necessary to have a limited scripting language in the BDS to figure out which device to use; we can write a real shell script to do it.

Having Linux in the firmware makes it possible for us to build slightly more secure servers that we can flexibly adapt to our needs and end up with an overall more resilient system.

Security

Reducing attack surface

One of the security improvements comes from greatly reducing the attack surface. In the UEFI DXE phase there was a stack of "device, bus or service drivers" that represents an enormous chunk of code.

On the Intel S2600 platform there are over 400 modules in this section, with an entire operating system's worth of drivers. One security concern is that it loads option ROMs from devices; you can rewatch my Thunderstrike talk from 31c3 for examples of why this is a bad idea. There are modules to decode JPEG images for displaying vendor branding during the boot, an area where buffer overflows have been found in the past.

There is an entire network stack, IPv4 and v6, all the way to HTTP. There are drivers for legacy devices like floppy drives, a dusty corner where things like Xen have had bugs in the past. There is something named "MsftOemActivation", who knows what that does. There is even a y2k rollover module that probably has not seen much testing in the past two decades. Getting rid of the DXE phase removes all of these as potential vulnerabilities.

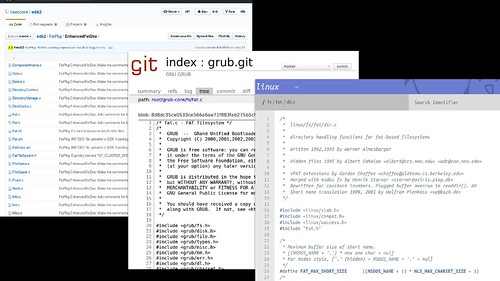

The last phase before the real OS on the UEFI slide was the "final OS bootloader". In most Linux systems this is GNU GRUB, the grand unified boot loader. Many of you are probably familiar with its interface, but did you know that it is nearly an operating system of its own? It has around one thousand source files, including file systems, video and network drivers, and in total it contains around 250k lines of code.

I don’t bring up the size of these things to complain about how much space they take up, but because it affects the attack surface. You might think that having three different operating systems would provide defense in depth, but I would argue that our systems are only as secure as the weakest link in this series of chain-loaded operating systems. If a vulnerability is found in UEFI, it can compromise grub and the OS. If a vulnerability is found in grub, it can compromise Linux. If a vulnerability is found in Linux, hopefully it gets fixed promptly.

Weakest Links

As we saw, all three of them have network device drivers, which makes them potentially vulnerable to attacks that can be launched remotely over the network. Linux’s network stack has had significantly more real-world testing through exposure to the internet at large, so hopefully it is more secure.

We’re also concerned about attacks that might be launched by a local attacker who can plug a device into a USB port. UEFI, grub and Linux all have USB stacks. USB isn’t simple to implement, and descriptor overflows have been used in the past to get code execution.

And we’re also worried about attempts to gain persistence or code execution through file system corruption, and since all three of them have parsers for FAT, this would be a way to do so. Which one has had better fuzzing and protection against corrupt filesystems?

If we’re going to have an operating system in a trusted role in our firmware and bootloader, it makes sense that it should be one that has had far more contributors and real-world testing. UEFI and grub have both had far fewer developers and commits than Linux, and Linux's filesystems and network stack have had far more exposure to attackers, which is why I think that we should be using it in this role.

Why not coreboot?

You might ask, why can't we replace the SEC and PEI phases of the server firmware with coreboot like we do on laptops running Heads? Why make the moral compromise to reuse those pieces of vendor blobs? The issue is that CPU vendors are not documenting the memory controllers and CPU interconnects sufficiently for free versions of the initialization routines to be written.

Instead, the CPU vendors provide the firmware vendors with a binary blob called the Firmware Support Package, the FSP. This opaque executable handles much of the early phase of the boot process and is an irreplaceable part of the firmware, much like the microcode updates that are also opaque and non-free.

Even coreboot systems with modern CPUs call into the FSP to do early chipset initialization, and on these SoC machines the FSP has grown in scope to include video setup and other parts, so that on these platforms it is even larger than SEC and PEI.

So reusing SEC and PEI isn't quite as much of a moral compromise as it might seem at first, and on many platforms we can substitute our own PeiCore.pe32 to ensure that measurements would detect any unauthorized changes to that part of the ROM.

One other hurdle to replacing the SEC phase is that modern CPUs validate a signature on an authenticated code module (ACM) when they come out of reset. This Boot Guard ACM measures the first part of the ROM into the TPM, which is good news since an attacker can’t bypass measured boot, but the bad news is that we can’t replace that initial SEC phase. On the plus side, the ACM is a small amount of code, so we can inspect it through reverse engineering to ensure that it is not doing anything too sneaky.

Flexibility

Configurability

LinuxBoot brings the full capabilities of a real operating system to the boot loader. Instead of the limited scripting available in the UEFI shell or the grub menuing system, we can write our system initialization with the powerful tools that Linux provides. Normal shell scripts, just-in-time compiled Go, formally verified Rust, etc. are all possible.

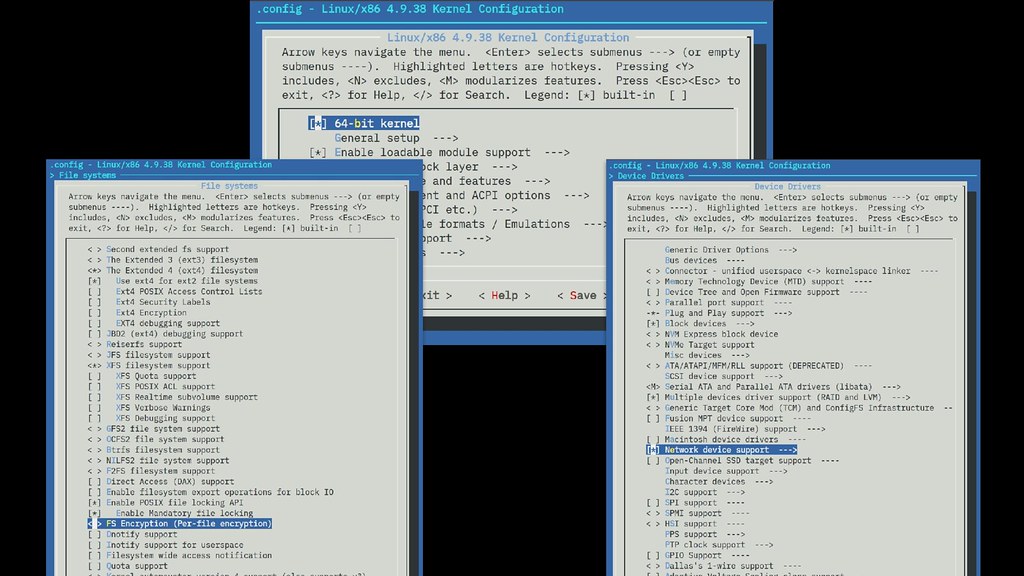

We also have the configurability of Linux. UEFI supports booting from FAT filesystems. LinuxBoot supports whatever Linux supports, including fully encrypted disks if that is what you need. UEFI typically supports the one network card on a server's motherboard. LinuxBoot supports any network card that Linux supports. UEFI is limited to fetching the kernel via PXE and insecure protocols like tftp. LinuxBoot lets you use real network protocols as well as remote attestation. And the device drivers included can be stripped down to the ones that are actually required to boot in order to reduce unnecessary attack surface as well.

Runtimes

LinuxBoot supports different runtimes, sort of like distributions. The Heads runtime that I presented last year at CCC works well with LinuxBoot and is focused on providing a measured, attested, secure environment. Using the TPM for remote attestation on a bare metal cloud server allows you to have some level of faith that the system has not been tampered with by a prior tenant or a rogue system administrator at the cloud provider.

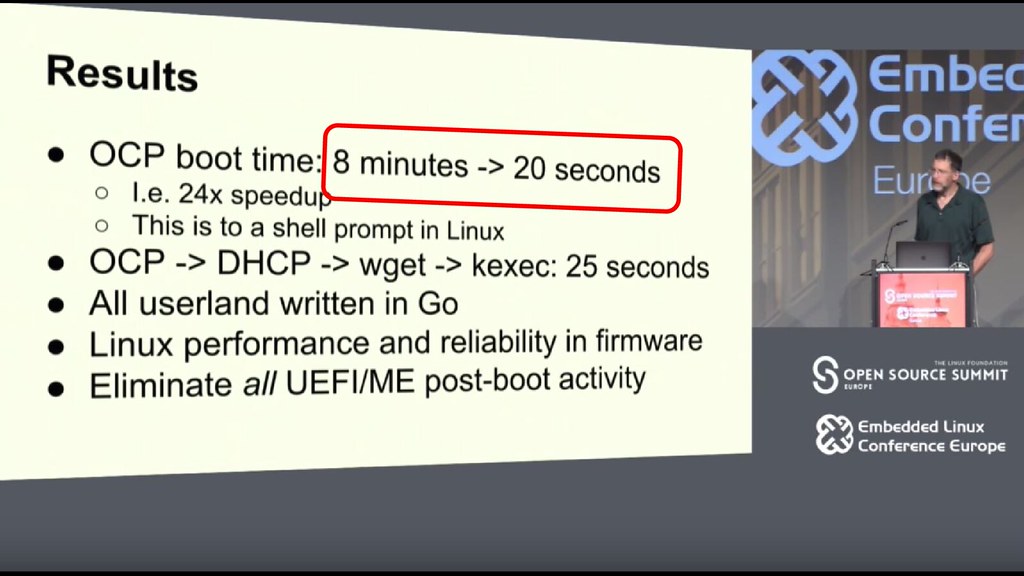

Ron is working with a Go runtime called NERF that replaces the traditional shell scripts with just-in-time compiled Go. He gave a talk about it at the Embedded Linux Conference earlier in 2017. Using Go has several advantages over shell scripts: it is memory safe, it is easier to write tests, and it is very popular inside of Google.

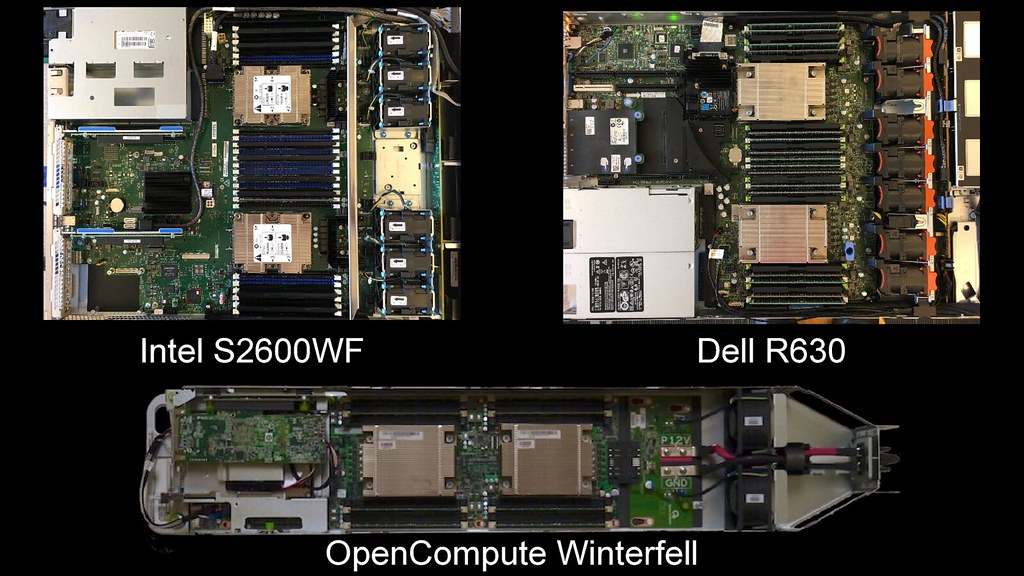

An additional advantage of LinuxBoot is speed: many server platforms take many minutes to go through all of the UEFI phases and option ROMs, while LinuxBoot is able to get all the way to an interactive shell on the serial console in a few seconds. On the OpenCompute Winterfell nodes this is a reduction from 7 minutes to 20 seconds. I’ve seen similar results with the Intel S2600 mainboard; here’s a video of it going from power-on to the shell on the serial console in about 20 seconds.

It scrolled by pretty fast, but one of the interesting bits is that the kernel thinks it is booting on an EFI system with ACPI. This shim layer reduces the complexity of patches required in the Linux kernel and should make it easier to keep the LinuxBoot kernel up to date with security patches.

Resiliency

I'm really glad that Congress has added a resiliency track to focus on how we can improve the situation around security and recovering from attacks. I encourage CCC to also add a track on social resiliency to discuss making Congress safer for all attendees and dealing with both online and offline attacks. (background, lightning talk, CoC issues)

The three principles that I outlined last year in my Heads talk at CCC are that our systems should be open source, so that we can inspect and modify them; they should be reproducibly built, so that we can avoid compile-time attacks; and they should be measured with some sort of trusted hardware to detect runtime attacks.

Open

Open is key because most system vendors don’t control their own firmware. They tend to license it from independent BIOS vendors and can only afford to release patches for the most recent models, so being able to deploy our own security patches and support our own hardware is vital. This "Self-Help" capability ensures that older hardware can continue to receive updates, as long as there are motivated community members using those machines.

Systems with closed and obfuscated code can hide bugs for decades, which is especially bad for components like the management engine that are in privileged positions. There shouldn't be any secrets inside of the code for these devices, so keeping them closed only makes the security community very suspicious. Even worse than accidental bugs, occasionally vendors have betrayed our trust entirely and used the firmware to install malware or adware.

Reproducible

Reproducible builds are necessary to know that what we're running is what we have inspected, audited and actually built. Reproducibility makes it possible for everyone building the firmware to produce bit-for-bit identical binaries. This allows us to verify that the code that we're building is the same as what other people have inspected and built, which protects both against accidental vulnerabilities through compiler bugs or incorrect libraries being included, as well as deliberate attacks on the build tools such as Ken Thompson's "Trusting Trust" hack.

The Linux kernel and initrd inside of LinuxBoot are currently reproducible, although the vendor components and small shim layer that we reuse are not. While we don't have control of the vendor pieces, we are working on making the edk2 parts that we reuse reproducible.

Measured

Reproducible builds ensure that what we build at compile time is what we expect, and measuring the firmware and its data into a trusted device at runtime is necessary to ensure that what is running is what we expected.
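
The property that makes measurement useful is the TPM's extend operation. The following Go sketch simulates a single SHA-256 PCR in software to show that the final value depends on every measured component and on the order in which they were measured. It is a model only, not a TPM driver. The component contents are placeholder bytes, and the all-zero starting value is a simplification of the post-reset state.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// extend models a SHA-256 PCR extend: the new value is
// SHA-256(old value || SHA-256(measured data)). A PCR cannot be written
// directly, only extended, so its final value commits to every
// measurement and to their order.
func extend(pcr [32]byte, data []byte) [32]byte {
	digest := sha256.Sum256(data)
	return sha256.Sum256(append(pcr[:], digest[:]...))
}

// measureChain starts from an all-zero PCR (a simplification of the
// post-reset state) and extends each boot stage in order.
func measureChain(stages [][]byte) [32]byte {
	var pcr [32]byte
	for _, s := range stages {
		pcr = extend(pcr, s)
	}
	return pcr
}

func main() {
	// Placeholder bytes standing in for real firmware components.
	good := [][]byte{
		[]byte("PEI core"),
		[]byte("LinuxBoot kernel"),
		[]byte("initrd"),
	}
	tampered := [][]byte{
		[]byte("PEI core"),
		[]byte("LinuxBoot kernel + implant"),
		[]byte("initrd"),
	}
	a, b := measureChain(good), measureChain(tampered)
	fmt.Println("expected:", hex.EncodeToString(a[:]))
	fmt.Println("tampered:", hex.EncodeToString(b[:]))
	fmt.Println("match:", a == b)
}
```

Changing any single byte of any stage, or reordering the stages, yields a different final value. Later stages also cannot undo an earlier measurement, because the register can only be extended.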

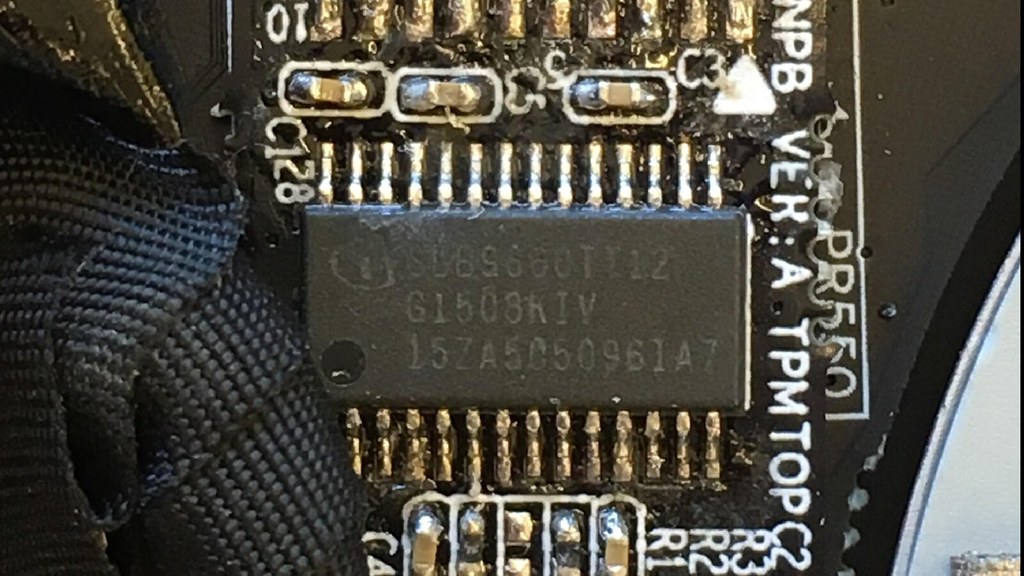

The TPM can be used to perform cryptographic remote attestation of this runtime state. We're collaborating with the Mass Open Cloud on ways to provide this hardware root of trust in bare metal cloud servers.

The TPM isn't invulnerable, as Chris Tarnovsky demonstrated, and Joanna Rutkowska has pointed out that calling something "trusted" is a bad thing, since that device is now in a position to subvert that trust. However, measuring the system into the TPM helps raise the difficulty of launching an attack. Tarnovsky's attack required decapping the chips to read out the secrets, and being able to remotely attest to the firmware, its data and the OS that was loaded gives us a way to have more faith in our cloud systems running LinuxBoot.

Ongoing research

One area of ongoing research is figuring out how to reduce the vulnerabilities to over-privileged devices like the Intel Management Engine. The efforts of Nicola Corna and the coreboot community on me_cleaner seem to work fairly well on the server chipsets, so we're experimenting with how much we can remove from the ME firmware and still have stable systems.

The Baseboard Management Controller (BMC) on server mainboards is another over-privileged component that can intercept keyboard/mouse/video as well as perform DMA. Many of them are based on ancient Linux distributions; I've encountered 2.6 kernels recently on some boards.

Through the efforts of Facebook's OpenBMC project, there is already a way to replace the proprietary BMC firmware with our own.

Facebook is able to use their influence with the OpenCompute initiative to push this, so the latest OpenCompute CPU, storage and switch nodes ship with OpenBMC as standard.

This is where we want to get to with LinuxBoot as well. Ideally the systems your cloud providers purchase would come with it by default, and on bare metal systems you would have the flexibility to install your own customized runtime. I'm excited to talk with more OEMs about this possibility and hope that Linux in the BIOS becomes a reality again.

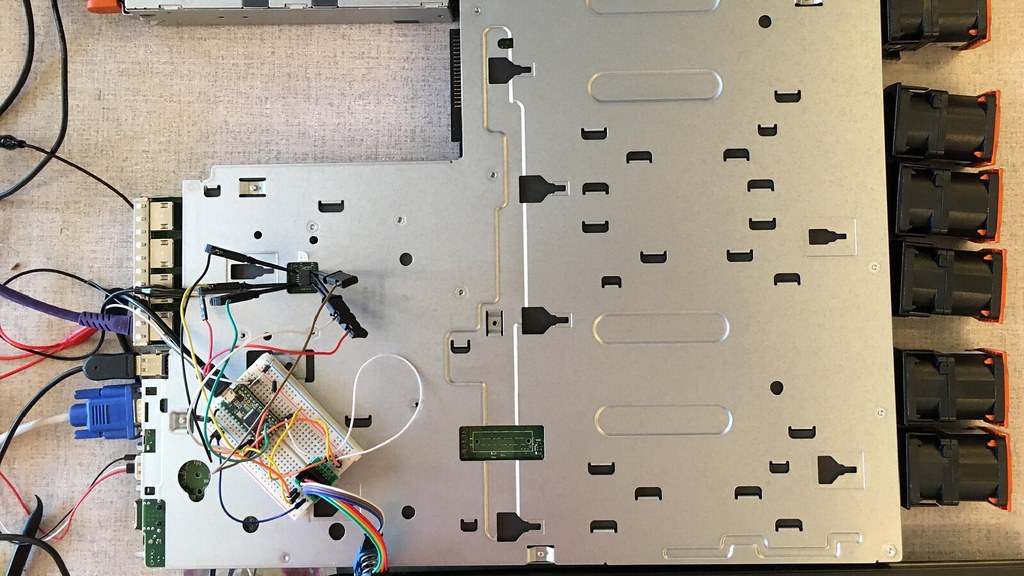

Until then, installing LinuxBoot requires physical access to the SPI flash chip, a hardware programmer and some experience dealing with firmware bringup issues.

If you do want to get involved, we're currently supporting three mainboards. The Intel S2600 is a modern Wolf Pass server board, the Dell R630 is a slightly older and inexpensive system, and Ron and Jean-Marie are working on the OpenCompute Winterfell nodes.

The LinuxBoot.org website has links to our mailing list, Slack, source trees, etc., and we'd enjoy your support in building these more secure, more flexible and more resilient systems.
