36c3

The 36th annual Chaos Communication Congress (2019), held at Congress Center Leipzig. Fahrplan (schedule) of talks and videos of all of them. These are my notes from the talks that I attended or watched; some of them are written up and some are in raw form based on what I noted during the talk. My photos from the event are also online.

Highlights:

- bunnie claimed FPGAs are more secure than ASICs against supply chain attacks.

- glitching is a powerful attack against TrustZone, even for "secure" hardware

- ME and PSP researchers have progressed significantly

Open Source is insufficient to solve trust problems in hardware

Presented by bunnie, xobs, Tom Marble. Video and abstract.

Open source provides tools for transferring trust, through hashing and signing, but these are still subject to "time of check / time of use" (TOCTOU) vulnerabilities because "decoupling the point of check from point of use creates a MITM opportunity". In hardware these vulnerabilities arise because time of check is the wrong way to think about it: place of check matters more.
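A minimal sketch of the "time of check / time of use" gap for a hashed image. The example data and the helper name `verify_then_use` are hypothetical; the point is only that the bytes that get used should be exactly the bytes that were checked.

```python
import hashlib
import hmac

def verify_then_use(image: bytes, expected_sha256: str) -> bytes:
    """Check and use the *same* in-memory bytes, so nothing can be swapped in between."""
    digest = hashlib.sha256(image).hexdigest()
    if not hmac.compare_digest(digest, expected_sha256):
        raise ValueError("image does not match the published hash")
    return image  # the caller uses exactly the bytes that were hashed

# Hypothetical example data, standing in for a firmware image and its published hash.
firmware = b"example firmware image"
published = hashlib.sha256(firmware).hexdigest()

checked = verify_then_use(firmware, published)
```

Hardware has no equivalent of returning the checked bytes: the part that was inspected and the part that ends up in the device can differ, which is why the place of check matters.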

The supply chain has so many areas for MITM attacks that it becomes very difficult to trust the hardware. Implants can be added at almost any stage along the supply chain, and "you can't hash hardware", so it is very difficult to verify any of the steps. Open Firmware can remove closed source blobs and make some of this a software problem, but you still depend on the hardware to run the verification.

Supply chain attacks happen all the time, typically to make a quick buck in profit margin rather than malicious security implants. Counterfeit or mislabeled or rebranded (old versions, wrong die, etc) hardware shows up all the time due to very small margins, 3% is not uncommon. And for some security critical pieces, 3% compromised can translate to horizontal movement that means 100% compromise, which requires near 100% verification of hardware.

Visual inspection of parts or JTAG testing is fairly easy, but doesn't guarantee that the part is what it says that it is. X-Ray inspection is harder and can find some types of attacks. Scanning Electron Microscope (SEM) inspection is really limited to very security critical parts and very challenging to carry out.

Extra wire-bonded die

Adding an extra die on top of an existing die is really easy in the manufacturing process. Most manufacturers are doing something like this already to combine multiple dies into a single package, so it is not uncommon to see. Top-down xray doesn't show this sort of implant since the solder pad on the bottom of the board is too hard for the xrays to penetrate and provide contrast. Sideways xray inspection can show more, but typically requires removal from the board.

The other problem is that silicon is mostly xray transparent, so the actual die doesn't show up. The wirebonds will be visible, although they can be difficult to distinguish from the wirebonds that are already supposed to be there and an adversary can arrange their wires to match existing ones. CT scans can show the extra package, but again at a much more expensive scanning and typically destructive process.

Launching this sort of attack requires only a few thousand dollars of hardware for the wirebonder and a few tens of thousands to make the dies.

Through Silicon Vias

Many WLCSP packages consist of a silicon die with solder balls directly attached. A TSV implant can sit directly underneath the die with holes that line up with the balls so that the solder passes directly through. This is really hard to detect since the packages look almost exactly like a normal WLCSP.

$100k to design and fab the TSV die, although potentially detectable through physical inspection and disassembly.

Chip design modification

Even if the CPU or ASIC design is open source, it is still possible for the foundry to build implants into it in the RTL netlist, in the hard IP blocks, or in mask modifications. Most customers don't make their own masks - "Customer Owned Tooling" (COT) requires multi-million dollar software and specialized teams, so typically the silicon foundry or service like Socionext handles the place-and-route, IP integration, etc.

The hard IP blocks can attack even COT designs since things like SRAM, efuse, RF, analog parts (PLL/ADC/DAC/bandgap), and the pads tend to be provided by the foundry. These are "trade secrets" held by the foundry and typically specific to their processes, so even COT customers tend to use them. The scope for backdoors in those components is quite wide, so it would be easy to add implants in any of the stages.

Perhaps a COT customer decides to go with a lower density SRAM, lower efficiency ADC, etc of their own design to protect against this. The foundry still can tamper with the masks since there is quite a bit of mask editing and splitting that happens during production. Most masks aren't perfect the first time, so they are "repaired" and edited during the process. The various layers have to be split out, which makes it possible to add dopant tampering that doesn't change the layout, but would affect RF or analog regions (BeckerChes13).

Interdiction

As shown in the photo, there is also lots of attack surface in the parts supply, shipping, PCB assembly, etc. Open source and open hardware doesn't prevent someone from attacking the device in shipment and "you can't hash hardware", so detecting those modifications requires significant effort.

Detection

Ptychographic x-ray imaging builds a 3D model of the entire hardware and is non-destructive, but requires a building-size microscope. And verifying one chip only checks one chip, while one compromised server can compromise the entire infrastructure. We don't use random sampling for software signatures, so why do we expect that random sampling for hardware verification would be effective?
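To put a rough number on why sampling is not enough, here is a small sketch, assuming each part is independently compromised with the same probability. The 3% figure is the one mentioned earlier in these notes; the fleet sizes are made-up examples.

```python
def p_at_least_one_bad(fraction_bad: float, units: int) -> float:
    """Probability that at least one of `units` independently drawn parts is compromised."""
    return 1.0 - (1.0 - fraction_bad) ** units

# 3% is the figure from the talk; the fleet sizes are hypothetical.
for fleet in (1, 10, 100):
    print(fleet, round(p_at_least_one_bad(0.03, fleet), 3))
```

Under that assumption a fleet of 100 unverified machines almost certainly contains a bad part, and one bad machine can be enough for lateral movement.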

Open source can help, especially with open hardware and things like microcode transparency to understand what is going on inside cpu, but it is still not sufficient. bunnie proposed three important properties:

- complexity is the enemy of verification

- verify entire systems

- empower users to verify, seal and own their hardware

Betrusted

bunnie's new project, Betrusted, is a mobile device for communication, authentication and wallet functions as sort of a "mobile enclave".

Current enclaves don't handle user IO very well - it is hard to know if you are talking to the real enclave. Additionally, the keyboard can be logged, the video can be tampered with, etc.

They need the entire device to be verified and verifiable, so the design is supposed to be simple.

It is built with a two-layer board to make it easier to inspect and harder to hide things on inner layers.

The physical keyboard has no controller (which means no firmware) and the Sharp B&W LCD has drive electronics that can be inspected visually in a non-destructive manner (color LCDs typically have bonded CPUs that are harder to inspect).

Amazingly they are trying to handle unicode, which is going to require fonts and significant software development.

The CPU is a RISC-V softcore in an FPGA. An off-the-shelf cpu is hard to inspect; it typically requires destructive analysis. There are optical fault injection ways to do this, but they are not yet feasible and too expensive. An FPGA allows a user-configured design, which is sort of like ASLR for hardware since it is hard to know what pieces will do what. The totally open source ice40/ecp5 FPGAs are not feasible for this project since they have worse battery life than the closed source spartan7, especially since the FPGA is already 10x the power draw of an ASIC. The open source prjxray can understand the xilinx bitstream, so they can detect build-time attacks by diffing modifications, although reproducible FPGA builds are not really a thing right now.

Unfortunately the FPGA is closed silicon and it is hard to know what parts of it do. There are special blocks that can introspect the FPGA; turning them off might prevent backdoors, although it is not clear what other weird modes there might be. bunnie argues that since the DoD uses them in their secure applications, they probably don't want Xilinx backdoors either and have looked for them. For external devices they can enable bus encryption and permute data pins, etc.

Security comes from HMAC and AES on the bitstream. Device ships without keys fused; user builds own bitstream and encrypts it as part of taking ownership. The goal is that it would take more than a day for an attacker with physical access to the device to extract the keys (side channels, probing, etc), and to try to make it possible to detect such an attack. bunnie proposes Bi-Pak Epo-Tek 301 epoxy as a way to prevent easy physical attacks (also that glitter nail polish doesn't work).
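A minimal sketch of the authentication half of that scheme, assuming HMAC-SHA256 with the tag appended to the data. This is not the Xilinx bitstream format, the AES encryption step is left out because Python's standard library has no AES, and the key and bitstream are placeholders.

```python
import hashlib
import hmac
import os

def seal(bitstream: bytes, key: bytes) -> bytes:
    """Append an HMAC-SHA256 tag so later tampering can be detected."""
    return bitstream + hmac.new(key, bitstream, hashlib.sha256).digest()

def unseal(sealed: bytes, key: bytes) -> bytes:
    """Return the bitstream if the tag verifies, otherwise raise."""
    body, tag = sealed[:-32], sealed[-32:]
    expected = hmac.new(key, body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("bitstream authentication failed")
    return body

user_key = os.urandom(32)  # generated by the owner when taking ownership (placeholder)
sealed = seal(b"placeholder bitstream", user_key)
```

Because the key is generated by the user and not shipped in the device, nobody upstream in the supply chain can produce a bitstream that passes `unseal`.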

One of the challenges is to teach end users to verify their own devices. He closed out by pointing out that we don't know what real people are like and that the project needs to work with more end users.

Siemens S7 PLC unconstrained code execution

Ali Abbasi and Tobias Scharnowski. Video and abstract

Siemens + Rockwell have >50% market share of the "programmable logic controller" (PLC). The researchers looked into the S7-1200, an entry level PLC ($250 + power supply) that has a Siemens branded IC. They X-rayed and CT-scanned the 4 layer PCB but didn't find anything interesting other than some neat photos. They decapped the SoC and found a Renesas 811005 ARM Cortex-R4 from 2010. The CPU's bootloader is stored in SPI flash (the usual m25p40) and there is a NAND flash for firmware.

The on-die bootrom only does a crc32 of the SPI flash loader, which means that a local attacker can replace it without any signature. They also found that if a special command is received on the uart in the first 0.5 seconds after boot up, the bootloader enters a special access mode.
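A small sketch of why a CRC is not a signature. The layout (a loader plus a stored CRC32 value) is an assumption for illustration, not the real flash format: anyone who can write the flash can recompute the checksum for their own loader.

```python
import zlib

def bootrom_accepts(loader: bytes, stored_crc: int) -> bool:
    """Model of an integrity-only check: compare CRC32 of the loader to a stored value."""
    return zlib.crc32(loader) == stored_crc

original = b"vendor bootloader"
flash = (original, zlib.crc32(original))

# An attacker with write access to the flash replaces both the loader and its CRC.
patched = b"attacker bootloader"
evil_flash = (patched, zlib.crc32(patched))
```

Both `flash` and `evil_flash` pass the check; a CRC only catches accidental corruption, since computing it requires no secret.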

Digging through firmware dumps they found the string "AG ADONIS RTOS" and found employees on LinkedIn who worked on Adonis (as well as PDCFS).

They also found lots of internal components, such as MC7P == internal JIT/VM, OMS == configuration system, MiniWeb server + OpenSSL, SNMP, NTFS and more.

The chip is configured with some anti-debugging features, such as detecting the CoreSight debugger and bricking the PLC by erasing the NAND firmware.

Some attacks are prevented by enabling CPU features like the MPU, whose eXecute Never bits stop code from running out of data regions.

However, the firmware image is available from the Siemens website (they were also able to dump RAM) and they mapped 84k functions in the 13MB image. ADONIS uses the InterNiche Technologies TCP stack and some OEM leaked it by accident, which made it easier to map the functions to the real firmware.

Typically firmware updates are uploaded to the internal website (miniweb) or Siemens sells a 24 MB sd card for $250. UPD files are LZP3 compressed, signed with ECDSA over SHA256 and validated with a public key in firmware.

The miniweb uses old jquery and implements their own template language (MWSL) which trusts the user, so it is full of XSS. There are also undocumented http endpoints that they found.

The uart secret knock to enter special mode is MFGT1 (Mit freundlichen Grüßen).

It has lots of handlers that are dispatched based on a binary protocol.

The 0x80 handler writes to IRAM locations and the 0x1c handler calls functions from a table stored in IRAM, which together allow a total bypass of the cryptographic verification of firmware updates.

0x80 is slow, so they send a short first stage that just reads a longer payload. Their first example was a tictactoe demo, not quite doom, but fun.

They also suggest that there is fuzzable code in the file system, mc7 parser, and mwsl stuff

Challenges of Protected Virtualization

Janosch Frank and Claudio Imbrenda (IBM). Video

The IBM s390 mainframe has added extra instructions and hardware to implement protected virtual machines to protect against malicious operators and hypervisors, as well as bugs in the hypervisor. The protected state includes both memory and CPU state and is enforced through a combination of:

- encryption

- access protection

- mapping protection

- integrity

They use encrypted boot images to be able to pre-seed the vm with secrets (ssh keys, etc), which also requires integrity protection on the boot image. There is attestation to prove that the booted image is the one that was intended by the customer.

The threat model assumes that io is insecure and the vm must assume hostile devices, although their hardware virtualization might add protected channels in the future.

There are live migration issues (missed bullet points)

They claim that you can't trust the hypervisor, but that you can trust the ultravisor since it is signed by IBM.

- hardware/firmware

- takes over hypervisor tasks

- decrypts/verifies boot image

- protects guests from hypervisor

- proxies interactions between guest and hypervisor

The ultravisor can access all memory, but no other parts of the system can access ultravisor memory. It's not clear what outside connections the ultravisor has. The guest memory can be explicitly shared and used for bounce buffers for IO and IPC.

The hypervisor is still responsible for

- io and device model

- scheduling

- memory management (?)

booting

- guest boot in non-protected mode

- guest loads encrypted blob

- guest reboots into secure mode

- hypervisor asks ultravisor to set up protected guest

- sets up secure vcpu

- configures guest

- encrypts pages

- hypervisor schedules guest vcpu

Hypervisor still does some copies for io instruction emulation; to support this the ultravisor rewrites state to only include faulting instruction/registers. This seems complex.

SIDA - secure instruction data area: small 4k bounce buffers used for io control blocks and also console data. Avoids expensive traps for short operations.

"page fault" only allowed when expected, but how does the ultravisor know?

To implement swap requires assistance of the ultravisor:

- hypervisor asks for page eviction

- ultravisor measures and saves hash

- ultravisor encrypts page, marks as non-secure

- hypervisor writes to disk
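The eviction steps above can be sketched as a toy model. Everything here is an assumption for illustration: the class and method names are made up, the cipher is a throwaway SHA-256 keystream standing in for whatever the hardware really uses, and the swap-in path (`restore`) is my guess at the mirror image of the steps listed.

```python
import hashlib
import hmac
import os

PAGE = 4096

def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy SHA-256 counter-mode keystream; a stand-in for real hardware encryption."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]

class Ultravisor:
    def __init__(self):
        self._key = os.urandom(32)   # never visible to the hypervisor
        self._hashes = {}            # page number -> hash of the plaintext page

    def evict(self, page_no: int, plaintext: bytes) -> bytes:
        """Measure the page, then hand an encrypted copy to the hypervisor."""
        self._hashes[page_no] = hashlib.sha256(plaintext).digest()
        ks = _keystream(self._key, page_no.to_bytes(8, "big"), len(plaintext))
        return bytes(a ^ b for a, b in zip(plaintext, ks))

    def restore(self, page_no: int, ciphertext: bytes) -> bytes:
        """Decrypt a page coming back from swap and refuse it if the hash differs."""
        ks = _keystream(self._key, page_no.to_bytes(8, "big"), len(ciphertext))
        plaintext = bytes(a ^ b for a, b in zip(ciphertext, ks))
        measured = hashlib.sha256(plaintext).digest()
        if not hmac.compare_digest(measured, self._hashes[page_no]):
            raise ValueError("page was modified while swapped out")
        return plaintext

uv = Ultravisor()
page = os.urandom(PAGE)
on_disk = uv.evict(7, page)          # the hypervisor only ever sees this
```

The hypervisor can store or drop `on_disk`, but any modification is caught on the way back in because the hash never leaves the ultravisor.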

Not clear to me: how does this allow dirty/clean tracking?

There was a slide to compare to AMD-SEV and the various extensions like SEV-SNP to add memory write and mapping protection. The claim was that SEV doesn't support swap?

I asked about key management for setup. They have a public key for the machine that I think is signed by IBM and the attestation comes from the ability to decrypt this blob.

a: swap only includes a single page and can be integrity protected a page at a time; migration requires cpu state, as well as the entire memory state

q: nested virtualization? a: no

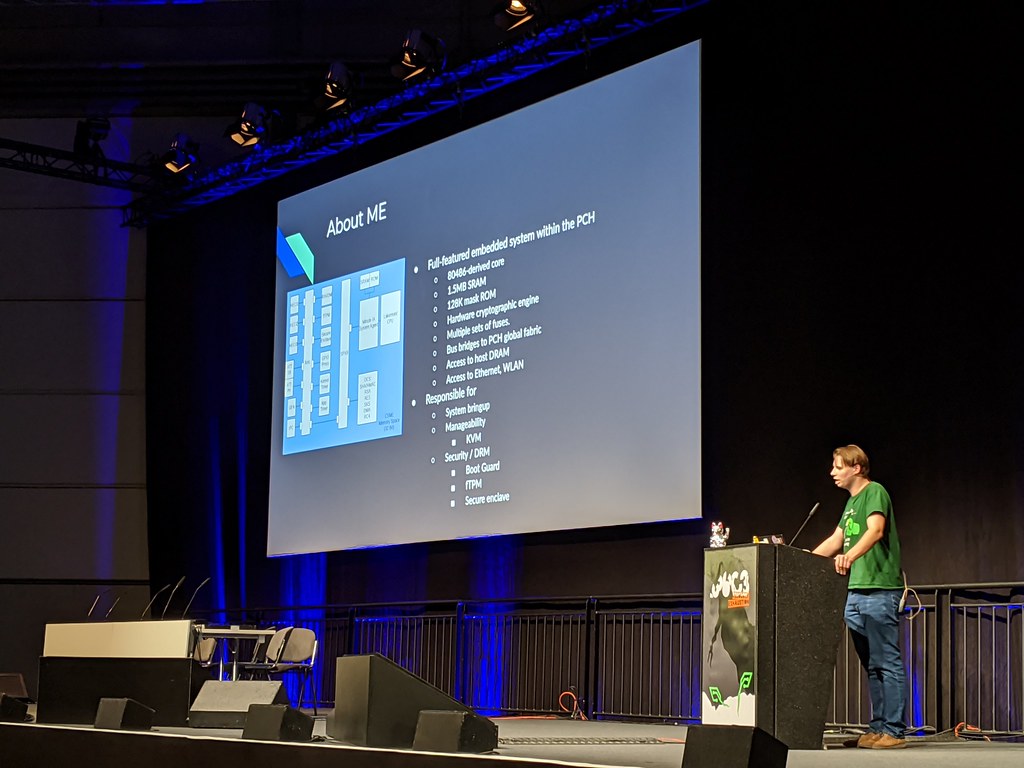

Intel ME Deep Dive

Peter Bosch, video and abstract.

The Intel Management Engine (ME) lives in the Platform Controller Hub (PCH) and handles system bringup as well as boot security features like Bootguard and the software fTPM. This talk covers the ME11 / sunrise point / lewisburg, which contains:

- 80486 derived core

- 1.5MB SRAM

- 128K mask ROM

- access to host DRAM

- access to ethernet, wlan

It will only boot an Intel signed image from the SPI flash and runs a Minix derived microkernel. One of the slides showed an assembly dump from Minix with a near 1-1 correspondence with the ME firmware image.

The talk covered previous research by Positive Technologies (Ermolov, Sklyarov, Goryachy), some of the newer tools from PT, work by Matrosov, etc. It also had content derived from Intel Firmware Interface Tool (FIT), which has xml files with human readable strings.

The ME firmware is composed of several partitions:

- ftpr/nftp -- read only mounted on /bin

- mfs - read/write config data

- flog - crash log

- utok - unlock token (must be signed by intel)

- romb - rom bypass

Each partition contains files:

- .mod - loadable data/code (flat binary)

- .met - meta data (tag/length/value format) which contains all info to reconstruct memory layout
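A generic tag/length/value walker, as a rough sketch of what parsing a `.met` file involves. The field widths and byte order below (32-bit little-endian tag and length) are assumptions for illustration and are not taken from the actual ME format; the sample data is made up.

```python
import struct

def parse_tlv(blob: bytes):
    """Yield (tag, value) pairs from a tag/length/value stream.

    Assumed layout (not taken from the ME format): 32-bit little-endian tag,
    32-bit little-endian length of the value, then the value bytes.
    """
    offset = 0
    while offset < len(blob):
        tag, length = struct.unpack_from("<II", blob, offset)
        offset += 8
        value = blob[offset:offset + length]
        if len(value) != length:
            raise ValueError("truncated TLV entry")
        yield tag, value
        offset += length

# Hypothetical two-entry example.
sample = struct.pack("<II", 1, 4) + b"\x00\x10\x00\x00" + struct.pack("<II", 2, 2) + b"ok"
entries = list(parse_tlv(sample))
```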

The ME has one-time-programmable (OTP) fuses that contain intel key hash, which are used to validate an RSA signature on partition manifest, which contains sha256 on partitions, and something that I missed.
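The chain of trust described above can be sketched roughly as follows. The use of SHA-256 for the fused key hash, the dictionary-shaped manifest and the `signature_ok` callback are all assumptions for illustration; the RSA check itself is stubbed out to keep the sketch stdlib-only.

```python
import hashlib
import hmac

def verify_chain(fused_key_hash, public_key, manifest, partitions, signature_ok):
    """Walk the chain: fuses -> key -> manifest -> partitions.

    `signature_ok` is a hypothetical callback that checks the RSA signature on
    the manifest; RSA itself is left out of this sketch.
    """
    if not hmac.compare_digest(hashlib.sha256(public_key).digest(), fused_key_hash):
        return False  # key in flash is not the one whose hash was fused
    if not signature_ok(public_key, manifest):
        return False  # manifest was not signed by that key
    for name, data in partitions.items():
        if manifest.get(name) != hashlib.sha256(data).hexdigest():
            return False  # partition contents do not match the manifest
    return True

# Placeholder data standing in for a real key, fuse value and partition.
key = b"placeholder public key"
fuses = hashlib.sha256(key).digest()
parts = {"ftpr": b"code"}
manifest = {"ftpr": hashlib.sha256(b"code").hexdigest()}
ok = verify_chain(fuses, key, manifest, parts, lambda k, m: True)
```

Only the small hash has to live in fuses; everything else can sit in rewritable flash because each step is pinned by the one before it.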

Loading the ME firmware into IDA can be confusing -- it seems to be missing code sections at fixed locations. This is because the firmware is essentially a "shared library", but with no dynamic linker. Those locations are in a jump vector table with fixed address entry points. If you find a ROM bypass version, it provides insight for all versions of that CPU. The code will be different, but those addresses are fixed for CPU versions and some of them are straight from minix code!

The two main shared libraries are syslibmod, which has a hosted libc, crypto, libsrc, and libheci for talking to the host; and the mask ROM on die, which is mapped at base 0x1000 and has a freestanding libc, MMIO and some utilities. There are also initialized data segments that live in the binary flash image and are copied by crt0. The libc includes the normal stuff, including pthreads.

However, very few strings are in the binary. Instead everything goes through SVEN - Intel Software Visible Event Nexus - in which print format strings replaced by message ids and the output goes to trace hub. These messages can be read from debug without UNLOCK and an older version of System Studio 20?? had a database of messages, unfortunately it is no longer present in newer tools.

Since the ME is based on the x86 it has all of the i386 features. Intel uses segmentation for MMIO! wtf. There is another x86 core called the Innovation Engine (IE) which appears to be a cut-n-paste of the ME and is intended for OEMs. Not in wide use, hp might use it?

The ME is connected to the pci fabric as well as the "sideband fabric", a packet switched network with 1-byte addresses. Peter found the spec in a patent and explored the devices out there. The address space is nearly full at this point.

Ermolov had code execution in BUP, which led to jtag exploit and unlock. Peter recreated this and was able to build his ME loader tool, which is like WINE for the ME. It proxies hardware accesses to the real ME and allows debugging of the ME executables on the host CPU. Using this tool he was able to trace through the bootguard config: the ME loads OEM keys from fuses, copies them into the register and could bypass with your own ME firmware. He traced accesses and wrote version that just pokes at registers.

Intel's dci / svt adapters are under NDA, but available on mouser. Peter has been analyzing the protocol and hopes to have a freely available version.

pmic cmx rot has one string?

Regaining control over your AMD CPU

Robert Buhren, Alexander Eichner and Christian Werling. Video.

arm cortex a5, since 2013

runs "secure OS/kernel", undocumented/proprietary

crypto functionality

hardware validated boot

acts as root of trust

runs applications, like SEV

- protects virtual machines in untrusted physical locations

- psp is the remote trusted entity even against physical access

also provides a TEE (linux kernel patch coming soon)

trust the psp for drm

summary: psp runs code you don't know and don't control

psp loads from flash, then starts the cpu, which loads the bios, which loads the os

stored in the padding regions outside of uefi spec

psp has its own file format with directory struct

each entry has header/body/signature

**FET** (Firmware Entry Table), aa55aa55

coreboot had some docs

psptool is on github

psptrace includes timing numbers (need to add to spispy)

each file header says which key to use

public key is in _flash_, but hash of key is in _ROM_

four x86 cores in a "core complex" (CCX)

two CCX in a "core complex die" (CCD), also memory controller and pcie, *AND PSP* (Naples)

each cpu can have multiple CCD

CCD0 is the "master psp", coordinates bringup

each psp has 256KB SRAM and MMU, split between SVC kernel and USR user

"boot rom service page" at 0x3f000

app code/data/stack loaded at 0x15000

mmio from 0x01000000 - 0xffffffff includes x86 memory?

DebugUnlock and SecGasket

ABL stages initialize and load next stages

SEV app is only loaded if requested by OS

applications use 76 syscalls (via `SVC` instruction)

- smn, dram, smm

- ccp ops, psp communication

- 18 unknown

system management network (SMN)

- hidden control network inside CPU

- psp/umc/smu/x86/??? are on the network

- dedicated address space, but requires mappings

- uses memory slots to map smn

debug strings sent via `SVC 6`, which is not implemented in production fw

with code execution, svc 6 can be registered to do spi write

spi flash write accesses are ignored, so it can be used for debug

analyzer logs and turns into console messages
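A rough sketch of locating a Firmware Entry Table candidate in a flash dump by scanning for the magic value from the notes above. The byte order, the 16-byte alignment and the sample image are assumptions; psptool is the real tool for this job and this is not how it is implemented.

```python
FET_MAGIC = bytes.fromhex("aa55aa55")  # byte order as written in the notes; may be reversed on disk

def find_fet_candidates(image: bytes, alignment: int = 16):
    """Return aligned offsets in a flash image where the FET magic appears."""
    hits = []
    pos = image.find(FET_MAGIC)
    while pos != -1:
        if pos % alignment == 0:
            hits.append(pos)
        pos = image.find(FET_MAGIC, pos + 1)
    return hits

# Hypothetical 4 KiB image with the magic planted at offset 0x200.
image = bytearray(b"\xff" * 4096)
image[0x200:0x204] = FET_MAGIC
offsets = find_fet_candidates(bytes(image))
```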

replaced sev app with stub that provides arbitrary code exec from x86

used official kernel sev driver to talk to the psp

discovered how memory protection slots worked

poked at SMN to probe devices

built a psp emulator, can forward things to real psp

- using unicorn

- can proxy hardware accesses

- nearly boots sev application

need to get code execution...

attacker controls SPI flash

files have signatures, but directory is not protected

- can add, remove and change entries

- can resize entries

Q: what happens if amd public key doesn't exist?

but only has space for 64 entries!

hits amd public key in boot rom service page

checks for max of 64 entries, but only on second directory

include our own public key and bam

control of all user land code on the psp

memory is split between kernel and user mode

kernel copies bios directory into kernel data space

and src and size are attacker controlled data :facepalm:

which will overwrite the kernel page tables

make everything user writable

application can take control of the psp kernel

amd has fixed these issues

**BUT** there is no rollback protection

demoed on epyc naples zen1

believed to exist in ryzen 1st gen

not sure about threadripper, rome, etc

these attacks require physical access

secure boot, tee and sev are all affected

also allows further research

- PSP loads SMU firmware

- PSP can reach SMM

- PSP loads UEFI

q: why is page table at end of data segment? a: just because, no reason

q: flash emulator / toctou? a: not necessary

q: boot rom issues? a: can't find the code, maybe not accessible?

q: can you make a psp cleaner? a: some stages are required to train DRAM and startup x86

q: microcode interactions? a: none found

q: anything evil in the code? a: no (lots of potential)

q: size of rsa key? a: 2048, maybe 4096 in newer ones

q: rollback protection difficulty? a: probably can't add to older versions, can not update bootrom

q: x86 core api bugs? a: (I think they misunderstood the question)

q: coreboot? a: can't remove boot rom, so can't be fully open

q: something about blocking bsd? not sure what the question was

q: enable jtag or other functions? a: first application is about `DebugUnlock`, maybe does it?

q: how long did this take? a: beginning of 2018 with master thesis

q: glitching? a: why?

Inside the Fake Like Factories

philip kreissel, svea eckert, dennis tatang. Video

like button is the largest influencer on facebook algorithm

most users prefer bad info (number of likes) versus no info

not just chinese/pakistan factories

also people in berlin, etc -- "PaidLikes" is a German company that pays

ndr did interview on tv with someone

created a fake company "Line under a Line" and paid for 200 likes

then messaged all of them to talk about who they worked for

30k users, have to use real account, 2-6 cents/click

2+ platforms

power clickers can earn up to 450 euros/month

bad opsec - campaign has small unique id with small int

scan the space, fetched 90k logged redirects

analysis... AfD and other political parties. mostly local parties.

most said they wouldn't do it

but one politician agreed to interview with off the wall response: "it's only fraud if we as a society say that is the measure of popularity"

facebook says they remove fake likes, temporarily blocked paidlikes (after 7 years!)

facebook also says they remove fake accounts before users interact with them

but maybe not

nato tested: 300 euros == 3.5k comments, 25.7k likes, 20k views, 5.1k followers

vice germany found hacked iot devices "your fridge is supporting the other candidate"

garden furniture store in germany with 1e6 likes

but all likes come from other character sets

facebook userid is also incrementing since 2009

currently 40 billion users?

can use id to estimate account age

different services have very different ages

can sample facebook ids to see which accounts are still active

random sample of 1 million from 40 billion, check if they exist over a year

facebook tried to block them via ip + captcha, used tor to bypass

Q: did they use the facebook onion?

zuck is user 4

(keynote timed out!)

only 1/4 is valid == ~10 billion registered users

more than there are humans over 13

2 billion new accounts between oct 2018 and dec 2019

facebook deleted 150 million over similar time frame

facebook claims to have deleted 7 billion new accounts?

could sites scale based on hyperactive users?

divide likes per day

which would lead to fake accounts to spread load between accounts.

paid likes has more knowledge about users than facebook... (they collect passports, etc)
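The "1 million sampled out of 40 billion, about a quarter valid" estimate can be reproduced with a few lines. This is a sketch assuming a uniform random sample and a normal-approximation confidence interval; the 250,000 count is just "a quarter of a million" and not a number from the talk.

```python
import math

def estimate_accounts(id_space: int, sampled: int, valid: int, z: float = 1.96):
    """Estimate how many IDs in `id_space` exist, with a normal-approximation interval."""
    p = valid / sampled
    half_width = z * math.sqrt(p * (1 - p) / sampled)
    return id_space * p, id_space * (p - half_width), id_space * (p + half_width)

# Figures from the notes: 40 billion IDs, 1 million sampled, about a quarter valid.
estimate, low, high = estimate_accounts(40_000_000_000, 1_000_000, 250_000)
```

With a sample this large the interval is narrow compared to the estimate, so the sampling error is small next to the question of what counts as a "valid" account.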

How to design highly reliable electronics

thasti and szymon. Video

Sat 28 Dec 2019 11:31:57 CET

cosmic background radiation

one bit flip every 36 hours

avionics failures

presenters develop rad hard electronics at cern

tmrg.web.cern.ch/tmrg/ccc

rad environments

- space (van allen belts)

- _juno radiation vault_

- xray (medical, high energy)

need to protect both combinatorial and sequential logic

- cmos tech implements both with n and p mos transistors

- dimensions of gate can be tens of nm

- very low energy required to disrupt

effects are both cumulative and single event

- cumulative:

- displacement (fluence): changes structure of circuit, not significant in cmos

- ionization (TID): adds electron, changes state of ion

- can cause slower transitions

- mitigate via shielding: works well in space, but not if you want to measure radiation at cern

- mitigate via transistor design: change dimensions to withstand dosages, some special layouts are better

- mitigate via modeling: characterize the failures, over-design the maximum frequency

- single event:

- caused by high energy particles, can happen any time

- hard errors (permanent): burnout, gate rupture, latchup

- soft errors: transient (in combinatorial) and static (latched into state)

high energy particle adds +/- pairs, ions will sort, cause spike

- can cause bit flip in a memory cell (sram flipflop)

- SEE can be modeled in spice as a current injection (on both sides, for a 0->1 and 1->0)

- SEU cross-section in cm^2 depends on physical layout ("collection electrode" size)

- as supply voltage and node capacitance goes down: critical energy goes down (less charge needed)

- as physical dimension goes down: SEU goes down (more charge needed)

- adding size increases SEU, but adds capacitance

- adding metal increases capacitance, but slows max freq

DICE:

- storing same bit in more nodes doesn't help, since error will propagate through loop

- dual interlock cell design can prevent propagation across nodes (at increase in area and write costs)

- and small DICE can be hit by a single particle

- double DICE reduces by two orders of magnitude, move things around

Correction

- hamming or reed-solomon

- hamming:

- 4 data bits + 3 protection: protect and correct single bits

- some more efficient ways

- triple modular redundancy (TMR)

- voting

- can introduce its own errors

- could corrupt all three registers

- clock skew ensures that voter output will only corrupt one flip flop

- what if we triplicate the voters?

- protects against most things, but at 3x area, power, etc

- scrubbing

- continuous check and refresh

- watchdog

- reboot if things go bad
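The "4 data bits + 3 protection" scheme from the list above is the classic Hamming(7,4) code. A minimal sketch in software, using the textbook bit layout rather than anything specific to the presenters' chips:

```python
def hamming74_encode(d):
    """Encode 4 data bits [d1, d2, d3, d4] into 7 bits with 3 parity bits."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4
    p2 = d1 ^ d3 ^ d4
    p3 = d2 ^ d3 ^ d4
    return [p1, p2, d1, p3, d2, d3, d4]  # bit positions 1..7

def hamming74_decode(c):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # parity over positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # parity over positions 2, 3, 6, 7
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]  # parity over positions 4, 5, 6, 7
    syndrome = s1 + 2 * s2 + 4 * s3  # 1-based position of the flipped bit, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]

word = hamming74_encode([1, 0, 1, 1])
word[4] ^= 1  # simulate a single bit flip
recovered = hamming74_decode(word)
```

A second flip in the same word would be miscorrected, which is one reason scrubbing (continuous check and refresh) is paired with it.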

TMRG takes verilog and automates triplications

- modern tools try to simplify logic, which removes duplication!

- modern cells are so small that single particle can mess up triplicates, so manual placing helps

- functional/physical verification with fault injection in simulation

- yosys to parse rtl and python verification framework
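A software sketch of the triplication and voting idea, assuming a plain bitwise majority voter. Real TMR lives in the netlist and this only illustrates the logic; the register value and the flipped bit are arbitrary.

```python
def vote(a: int, b: int, c: int) -> int:
    """Bitwise majority of three register copies."""
    return (a & b) | (a & c) | (b & c)

def scrub(copies):
    """Rewrite all three copies with the voted value (continuous refresh)."""
    good = vote(*copies)
    return [good, good, good]

registers = [0b1011_0010] * 3
registers[1] ^= 0b0000_1000  # a single-event upset flips one bit in one copy
registers = scrub(registers)
```

To an optimizer the three copies look like redundant logic, which is exactly why synthesis tools have to be told not to merge them.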

physical testing with xray

- measure frequency degradation

SEE testing with heavy ions

- requires vacuum

- expensive!

- localized, random effects

- hard to debug why

SEE with lasers

- single photon

- large energy into single transistor

- mostly destructive testing

- two photon

- cause small effect where they intersect

- xyz control: can scan across chip until things go weird

- can trace back to netlist

lessons learned:

- reset requires buffer and can have transient events, reset all registers

- tried triplicate reset, but shared pin with single buffer...

- verified verilog, but voter error caused bit flips. synthesis tools had identified redundant logic, removed extra voters

Trust Zone M(eh) - Breaking ARMv8-M security

Thomas Roth. Video

Sat 28 Dec 2019 14:13:35 CET

splits into two domains using the memory protection unit (MPU)

android uses it for key store

companies are trying to use them for hardware wallets, automotive keys, etc

"secure chip" requires a threat model

software attacks

hardware attacks

- debug ports

- side channel

- fault injection

vendor details are often lacking in threat model

fault injection is a local attack

memory partitioning into S and NS

code partitioned into Secure, Non-Secure Callable, NonSecure

"attribution units" - identifies security state of an address

the highest attribution from the multiple units is used

which means that if you can break one, you can escalate

SAU is an arm standard, on almost all systems

IDAU (implementation defined) might be custom
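A tiny model of how the attribution units combine, assuming the ordering non-secure < non-secure callable < secure and that the more secure answer wins. The enum and function names are made up for illustration.

```python
from enum import IntEnum

class Attr(IntEnum):
    NON_SECURE = 0
    NON_SECURE_CALLABLE = 1
    SECURE = 2

def final_attribution(sau: Attr, idau: Attr) -> Attr:
    """The more secure of the two attribution units wins."""
    return max(sau, idau)

result = final_attribution(Attr.NON_SECURE, Attr.SECURE)
```

On a part where only one unit actually marks a region as secure, corrupting that unit's answer is enough to change the result, since the other unit already says non-secure.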

voltage glitching can skip instructions, corrupt reads/writes, etc

removing external filter caps give direct access to the VccCore

supply vcore directly to keep things stable

mark eleven: ice40 glitching thingy

icebreaker + chipwhisperer + glitching

sam L11 is "very secure"

- shielding

- brown out detection

- but not hardened according to microchip

- does not have SAU, only has IDAU configured by boot ROM

- called "fuses", but writable

- registers/fuses control partitioning

glitching the boot rom that loads the idau

- too easy, spent time figuring out if the glitch was actually working

can't dump boot rom, didn't find code and don't know when idau is configured

want to glitch AS register which would make entire region non-secure

solution: brute force, too slow

solution: power analysis (riscure watched power traces on jtag to determine when it is turned off)

compared power when idau was enabled versus not

easily found region when it was different with chipwhisperer
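A sketch of the trace comparison, assuming two equal-rate power traces captured with and without the IDAU being configured. The traces here are synthetic numbers and `first_divergence` is a hypothetical helper, not part of the ChipWhisperer API.

```python
def first_divergence(trace_a, trace_b, window=4, threshold=0.5):
    """Return the first sample index where the mean absolute difference
    over `window` samples exceeds `threshold`, or None if it never does."""
    n = min(len(trace_a), len(trace_b))
    for start in range(n - window + 1):
        diff = sum(abs(trace_a[i] - trace_b[i])
                   for i in range(start, start + window)) / window
        if diff > threshold:
            return start
    return None

# Made-up traces: identical except for a short burst starting at sample 20.
baseline = [1.0] * 40
configured = [1.0] * 20 + [2.0] * 6 + [1.0] * 14

where = first_divergence(baseline, configured)
print("traces diverge near sample", where)
```

The index where the traces start to differ gives a rough time offset to aim the glitch at, which is far faster than brute forcing the whole boot.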

simple python for loop found glitch point and length in a few seconds

with a $60 set of parts

attiny85 can do it for $3...
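The "simple python for loop" could look roughly like the sketch below. The `simulated_target` function stands in for the real glitcher and chip, and its success window is invented; on real hardware `try_glitch` would arm the glitcher, reset the target and check whether the secure memory became readable.

```python
import itertools

def simulated_target(offset: int, width: int) -> bool:
    """Stand-in for hardware: the glitch only works in a small window.
    The numbers are invented for this sketch."""
    return 1230 <= offset <= 1234 and 8 <= width <= 10

def sweep(try_glitch, offsets, widths):
    """Try every (offset, width) pair and collect the ones that succeed."""
    hits = []
    for offset, width in itertools.product(offsets, widths):
        if try_glitch(offset, width):
            hits.append((offset, width))
    return hits

hits = sweep(simulated_target, range(1000, 1500), range(1, 20))
print(len(hits), "working parameter pairs, first:", hits[0])
```

Narrowing the offset range with the power analysis first is what makes the sweep finish in seconds.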

sam l11 kph ships with "Kinibi-M" TEE, customer can only write non-secure code

good test since it is supposed to be configured correctly

designed attack board with no filter caps

used openocd to read from trust zone

good for supply chain attacks: compromise TEE on parts

hack digikey to get invoice access, reroute delivery and swap the parts out

Q: decap versus glitch? which is easier/harder to pull off?

next up: Nuvoton NuMicro M2351

Cortex M23

SAU + IDAU

claims to be resistant

boot rom code is available?

BLXNS - branch with link and exchange to non-secure

jumping from secure into non-secure requires explicit instruction

uses lowest bit like thumb

does a bitclr before the instruction

needs two glitches, one for sau->ctrl and one for bitclear

works, but not stable
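A toy model of the bit-0 convention noted above, assuming that a cleared lowest bit in the branch target means "switch to non-secure" and a set bit means "stay secure". The function names and the address are hypothetical, and this only models the bit clear, not the SAU->CTRL glitch.

```python
def blxns_target_state(target: int) -> str:
    """Bit 0 of the branch target selects the state after the branch."""
    return "non-secure" if (target & 1) == 0 else "secure"

def call_nonsecure(fn_ptr: int, skip_bitclear: bool = False) -> str:
    # Secure code clears bit 0 before the BLXNS. A glitch that skips the
    # clear leaves a thumb-style pointer (bit 0 set) untouched.
    if not skip_bitclear:
        fn_ptr &= ~1
    return blxns_target_state(fn_ptr)

callback = 0x10000401  # hypothetical pointer supplied by non-secure code

print(call_nonsecure(callback))                      # normal path
print(call_nonsecure(callback, skip_bitclear=True))  # bit clear glitched away
```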

another attack on the RBAR write, which leaves the entire bottom half of the mirrored flash in non-secure mode

RBAR is supposed to be undefined, but always zero

next chip: nxp lpc55s69

cortex m33

dual glitch and rbar both work

however, `MISC_CTRL_REG` aka "Secure control register" is documented somewhere

enables secure checking

checks if AU security state matches MPC security state

requires multiple glitches to bypass

vendor is fixing docs

conclusion: this is only insecure against a very specific attack

@stacksmashing

q: stm32f4?

a: wallet.fail attacked a similar one. "firewall" is like trustzone

q: replicated on multicore?

All wireless stacks are broken

jiska. Video

Sat 28 Dec 2019 17:14:43 CET

near field is not really near

- can forward, relay and modify messages

- students asked to stop testing

- bheu talk on visa

bluetooth is a big surface

- encryption key stored on the device

- trusted communication based on key, which breaks android smart lock

- can escalate into other components

- `hci_reset` doesn't always reset, allowing persistence

- airplane mode might work

- rebooting phone might work

frankenstein fuzzer emulates bluetooth stack

- looked at early connection state

- no connection info, faster for attacks

- found heap overflows

- iphone 11 added bt 5.1

- bricked eval board...

escalation within a chip

- shared antennas (2.4ghz for bt + wifi)

- "proprietary deluxe!"

- iphone reboots

- broadcom is trying to fix it...

blueborne

- since 2017, but likely to reappear

- "web bluetooth"

linux bluetooth stack

- 23% from one committer

apple bluetooth stack

- so many implementations

android bluetooth

- all attacks start here...

- lots of students

- nobody wanted to work on windows

lte

- "Hexagon DSP" is awful

- simjacker

- ltefuzz (not yet disclosed due to disruptive findings)

threadx: we know, not our problem

samsung: only with nda

broadcom: only with nda, also doesn't issue cve

at least they weren't dealing with Intel...

System Transparency

Forgot to take notes. Basically: open firmware is good, and attestation of the entire boot chain, through the OS and the services running on it, gives end users better grounds for trust. The Mullvad blog post goes into details too.

Hacking with a TPM

Andreas Fuchs. Video

Sun 29 Dec 2019 21:53:32 CET

andreas fuchs works for fraunhofer-sit, sponsored by infineon

tpms are only installed due to microsoft

rms says "tpm are not dangerous"

my photos from wikipedia

tpm == proof of possession (similar to having a yubikey that lives in a usb slot)

tpm forwarded through to virtual machine

Q: slide 11: tpm2tss-genkey == any secrets in that file?

`curl --insecure` == client cert + tls is still actually secure

curl can talk to tpm for client side auth

can use tpm for automating bash scripts without having passwords in scripts

can also use tpm to auth ssh public keys and wrap it with git

`GIT_SSH` var controls which ssh will be used

q: tpm pin caching?

q: tpm + user agent?

q: can tpm sign commits?

luks2 now uses json headers

can use a type:tpm2 with tpm2nv/nvindex and pcr values

special cryptsetup branch

has to install on luks2 (cryptsetup convert)

q: slide 14: which pcrs are used?

tpm2-totp

gui to select pcr

kernel/initrd are in pcr?

q: trusted grub?

resource exhaustion: `tpm2_flushcontext -t / -l / -s`

q: tpm proof of presence?

a: not right now, maybe with some sort of led access

HAL - The open source hardware analyzer

Max Hoffmann. Video

Mon 30 Dec 2019 11:29:30 CET

max hoffmann

"trust me, I'm an engineer"

engineering is turning a high level idea into a thing

reverse engineering is turning a thing back into a high level description

lots of reasons: fix bugs, find trojans, search for ip

asic

- "classic integrated circuit"

- specific purpose

fpga

- internals are fixed, but general purpose

- reprogrammable hardware

both programmed in HDL

cpus have specific instruction set

hw has gate libraries

fpga == programmable elements

asic == transistors

sw is "compiled"

hw is "synthesized": synthesis, placement, routing

output of synthesis is "netlist", similar to assembly output

fpga turns netlist into bitstream, which maps elements to lut and routing

asic has to map to layers, "layout"

- typically logic on lowest layer ("cmos")

- upper layers for routing ("metal")

- interconnect layers with vias

fab turns layout into mask into chip

reverse engineering

- extraction of firmware, analysis of assembly or decompilation

- for fpga, bitstream is typically in spi flash, extraction is easy, and directly maps to netlist

- for asic, have to extract die from package ("decapsulation"), then alternate removing a layer ("delayering") and photographing it ("imaging")

- optics are too coarse to see features any more

- SEM for more modern things, with thousands of images for each layer

- stitch and align and process all these images to get netlist

- costly process, with specialized companies for each step

- gate libraries are proprietary, but can be reverse engineered on the fly with pattern matching

- netlist is not an easy way to reason about code

how to reverse engineer a netlist?

Q: yosys bitstream to verilog?

much research is not reproducible, poorly documented, crashes on realworld issues

bochum research university: HAL - gui for netlist

turn netlist into graph, apply graph algorithms

with plugins

turn parts of graph into modules

hal on obfuscated fsm hardware (ches 2018)

harpoon adds extra dummy states and transitions

hal recovers state machine

- state machine has self-updating state

- storage elements influence each other

- state transition logic -> state memory -> logic

- "strongly connected component"

- can ignore output logic

- can find initial state from reset logic or initial bitstream

- add brute force to find all states reachable from fsm

- demo: "not that easy in reality"
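The "strongly connected component" step can be sketched with Tarjan's algorithm over a flip-flop dependency graph. This is not HAL's plugin API; the graph, the node names and `fsm_candidates` are assumptions made up for illustration. An edge `a -> b` means the next value of `b` depends on `a` through combinational logic.

```python
def strongly_connected_components(graph):
    """Tarjan's algorithm. `graph` maps node -> list of successor nodes."""
    index, low, on_stack, stack, result = {}, {}, set(), [], []

    def visit(v):
        index[v] = low[v] = len(index)
        stack.append(v)
        on_stack.add(v)
        for w in graph.get(v, []):
            if w not in index:
                visit(w)
                low[v] = min(low[v], low[w])
            elif w in on_stack:
                low[v] = min(low[v], index[w])
        if low[v] == index[v]:          # v is the root of a component
            comp = set()
            while True:
                w = stack.pop()
                on_stack.discard(w)
                comp.add(w)
                if w == v:
                    break
            result.append(comp)

    for v in graph:
        if v not in index:
            visit(v)
    return result

def fsm_candidates(graph):
    """Keep components with feedback: several flip-flops that influence
    each other, or a single flip-flop with a self-loop."""
    return [c for c in strongly_connected_components(graph)
            if len(c) > 1 or any(v in graph.get(v, []) for v in c)]

# Hypothetical design: s0..s2 form the state memory, out0/out1 are output
# registers that can be ignored, cnt is a self-updating counter.
ff_graph = {
    "s0": ["s1", "out0"],
    "s1": ["s2"],
    "s2": ["s0", "out1"],
    "out0": [],
    "out1": [],
    "cnt": ["cnt"],
}

for comp in fsm_candidates(ff_graph):
    print(sorted(comp))
```

Output registers fall out as single-node components without feedback, which matches the note that output logic can be ignored.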

haifa paper: scandid "scan based reverse engineering"

- recovering netlist from scan chains

- scan chains for debugging and reverse engineering

- jtag shift in state

- run for one clock

- jtag shift out state

- attack is change one bit of input, see which bits change in output

- right choices of input changes allow recovery of boolean functions

- approximation of netlist
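A toy version of the scan-chain attack described above: shift a state in, clock once, shift the result out, then flip one input bit at a time to see which output bits react. The three-bit `hidden_next_state` function stands in for the chip and is invented for this sketch; a real design is far too large to enumerate exhaustively, which is why the choice of inputs matters.

```python
from itertools import product

def hidden_next_state(bits):
    """Stand-in for the chip's combinational logic (unknown to the attacker)."""
    a, b, c = bits
    return (a ^ b, b & c, 1 - a)

def scan_step(state):
    """Shift in `state`, run for one clock, shift out the new state."""
    return hidden_next_state(state)

def dependencies(n_bits):
    """For each output bit, the set of input bits that can change it."""
    deps = [set() for _ in range(n_bits)]
    for base in product((0, 1), repeat=n_bits):
        ref = scan_step(base)
        for i in range(n_bits):
            flipped = list(base)
            flipped[i] ^= 1
            out = scan_step(tuple(flipped))
            for j in range(n_bits):
                if out[j] != ref[j]:
                    deps[j].add(i)
    return deps

def truth_table(out_bit, inputs, n_bits):
    """Recover the boolean function of one output over its relevant inputs."""
    table = {}
    for values in product((0, 1), repeat=len(inputs)):
        state = [0] * n_bits
        for idx, val in zip(inputs, values):
            state[idx] = val
        table[values] = scan_step(tuple(state))[out_bit]
    return table

deps = dependencies(3)
print(deps)
print(truth_table(0, sorted(deps[0]), 3))  # looks like XOR of bits 0 and 1
```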

hal scandid plugin

- can run with real jtag or simulation

- finds flipflops and "LOB"

- not perfect: approximation of real netlist and LOB can be complex

hal still requires lots of manual intervention and thought

- some work on "cognitive obfuscation" to make it hard for people

- research into what is hard to do for both machines and people

Understanding millions of gates

Kitty. video

Mon 30 Dec 2019 12:50:24 CET

kitty

looking at the gap between rtl and packaging

(foundry ip, fab)

companies are concerned about foundries stealing designs

or out of spec chips entering market

users are concerned about ip vendors, design teams, foundry, etc

decap, delayer and image are "solved" - pay service

image processing, gate library, etc are "solved" - mostly just work

netlist reverse engineering is harder

- partition into modules

- identify modules

- too many gates for most guis

- mostly looking at known designs to understand new ones

partition methods:

- try to find "words" (collections of bits that are acted upon together)

- try to see where words move between modules

- AES s-box can be seen visually?

identification:

- set of known netlists: opencores, design libraries, etc

- graph isomorphism problem?

- need perfect netlist extraction, need perfect partitioning, etc

- fuzzy matching based on visualization

- depends on optimization levels, cell libraries, etc

hierarchy and function identification

- three counter measures

- logic locking

- split manufacturing

- ?

logic locking:

- add key inputs

- extra XOR layers in logic, must provide proper inputs

- RLL, FLL, SLL, SAT resistant, ...

- identifiable by XNOR/XOR gates, typically found and can be removed

- also doesn't change structure, still visually similar

- also requires secure key storage somewhere

- and messes up verification teams
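A tiny illustration of logic locking with key gates, assuming a made-up three-input function and a two-bit key. One XOR and one XNOR key gate are inserted; only the right key makes the locked circuit match the original. The brute force at the end only works because the key is tiny and the sketch has oracle access to the original function.

```python
from itertools import product

def original(a: int, b: int, c: int) -> int:
    """The unlocked design: (a AND b) OR c."""
    return (a & b) | c

def locked(a: int, b: int, c: int, key) -> int:
    k0, k1 = key
    n1 = (a & b) ^ k0            # XOR key gate, transparent when k0 == 0
    return 1 - ((n1 | c) ^ k1)   # XNOR key gate, transparent when k1 == 1

def working_keys():
    """Keys for which the locked circuit equals the original on all inputs."""
    inputs = list(product((0, 1), repeat=3))
    return [key for key in product((0, 1), repeat=2)
            if all(locked(a, b, c, key) == original(a, b, c)
                   for a, b, c in inputs)]

print(working_keys())
```

The structure of the circuit is unchanged apart from the extra XOR/XNOR gates, which is why they are easy to spot in a netlist.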

fsm locking

- blackhole states

- states with no exits

- visually identifiable

- keys can be deduced

split manufacturing

- "DOD trusted foundry"

- do 8nm elsewhere, 45nm locally

- however,....

- physical proximity leaks info: wires don't go that far

- not many loops

- load capacitance constrains fan outs and provides info

- "dangling wires" can reveal info

cell camouflaging

- use same metal layer for NAND and NOR

- different dopants for wiring

- large area overhead

- brute force to decamouflaging

- FIB/SEM can tell some dopants apart

most countermeasures are broken

- foundries can tell what is going on

- foundries can still add various backdoors

- no tools / no formal methods / no one cares

development doesn't require hardware

- yosys + software analysis

- graph logic needed

q: how do you visualize huge graphs?

a: graphviz, "gephi", graph-tool?

q: seeing chip running in SEM, you can tell what is happening?

a: really small tech is hard enough to image, much less operate

q: anti tamper coatings?

a: physical reverse engineers don't seem to have had any problems

q: recovering clock groups from netlists?

a: first step is always find clock tree, initial partition across clock domains

q: does anyone use obfuscation and what's the overhead?

a: some chips shipped with logic locking, but not 100% key gates, typically only in crypto or other areas

camouflage can add 1.5 - 2x overhead

q: challenges of 3d stacking?

a: not her problem. physical research is working on it.

q: fpgas?

a: clustering works well, logic locking less, camouflage not at all

q: real world?

a: hasn't seen it, would require large team at foundry to pull it off

big companies are reverse engineering their own parts to check foundry

in the us the research is funded by darpa.

Hackers and makers changing music technology

Helen Leigh. Video

Mon 30 Dec 2019 14:28:46 CET

helen leigh

"crafty kids guide to diy electronics"

what an instrument sounds like can be less important than what it looks like

"no noise, only sound"

440 Hz A is a modern invention

magnetic tape recorder

popularized by nazis?

replaced gramophones for music distribution

"musique concrète" in Paris (now on youtube!)

play tape like a musical bow

cut and flip

reverse attack and decay

sampling has become ordinary, but came from these experiments

"tomorrow never knows", not played for copyright issues

daphne oram

musician + physics + electronics

worked at bbc cueing reel to reel tape recorders

met "musique concrète" while in paris

made "electronic music" by recording oscilloscope signals

after seven years BBC gave her a studio, radiophonic workshop

left to start own practice and build synths

watercolor based music synth?

delia derbyshire

masters of music, intern at radiophonic workshop

designed dr who theme without multitrack tech

also a soundtrack for asimov story, which has been lost

modern music tech

ridiculous inventions are everywhere

cheap microcontrollers, easy pcb, easy synths

easy collaboration with niche communities online

machines room hackerspace in london closed due to rent increases

furby synth

circuit sculpture

cap touch makes for fun musical instruments

"utopian scifi creatures"

traditional functionality / untraditional form

purring tentacle with machine embroidered conductive thread

mpr121 sensor with more pins

bela boards

pocket beagle based

running pure data

imogen heap collaboration

mimu glove: $5k

based on cheap microbit from bbc $10

martine nicole

bishi

q: non-human music?

a: yes, but likes tactile interactions

craft store with a multimeter to find new materials

Infrastructure Review

Mon 30 Dec 2019 16:16:22 CET

300 G uplink

juniper mx960/480/204 and qfx10002

bcp38 ingress filtering to prevent spoofing

2500 tables, 300 switches

bring your own 10g uplink

300 access points

aruba wifi controllers (6x active-active)

redundancy caused packet loss

pixelflut caused broadcast storm

zmap wifi scan, had to nullroute source

only used 20% uplink

11k wifi clients

86% clients

only 11 802.11ax clients

2.4 ghz is over

Q: but what about esp?

reuse switches as cheese boards

still use 11 tons of CO2

bought carbon offsets

internal grafana database

varnish/nginx front end

netbox service discovery

## phone

arrive day -6, planned had to be online day -2

deployed 67 antennas

seamless handover

hotel and station

station installations including hbf!

super fast charging

5k phones

some DOS attacks on system

reverse engineering manufacturer database ids from registrations

## gsm

osmocom

open5gs

all eu spectrum has been sold, so license is hard

no 2g eu band available

850 mhz for both 2g and 3g

telefonica provided lte

56 sim cards at 31c3, 60 at 32c3, 700 at 36c3

manufacturing sim cards is hard?

crashed osmomsc via invalid mobile identity attack

custom code to support old 2g phones

## post

3k external postcards (to 42 countries in the "default world")

35k internal postcards (!!!)

bidirectional service

singing telegrams

random deliveries

"laugh letters" + "scented postcards"

uv pens: don't use for address

scratch off stickers

30 postcard designs

perfume was too strong

130 letters for activists in prison

## video

10 stages, 330 talks, 255 hours

stream re-encoding

vaapi hardware

45W laptop replaced 4 xeon servers

better profile for slides as well

setup machines by hand, caused screwups. replacing deployment with ansible

ran out of disk space on some systems

voctomix: video editor with transitions/insertions/mix/sync

"party mode"

## stage management

36 heralds, 70 stage managers

1 stage fright counsel, 6 incidents

## power

power factor 0.12 - 0.22! in one hall

"use more ohmic devices!"

lounge power usage is correlated with music

120 portable radios, new firmware

programmer is too slow

2 dead repeaters from camp

programming pc dead on arrival

## sustainability

two water dispensers for angels

8 volunteers

give-and-take electronics

ten boxes near sticker areas

organic bins

4 locations, needed more volunteers

recycling cigarette butts maybe worked?

## assemblies

419 assemblies

35k m^2

3k tables

6k chairs

c3 Meubelhuis: ikea delivered and removed tables

## c3lingo

15k minutes translated

100% en/de

2/3 into two languages

"swabian"?

first year for RU and PL teams

## subtitles

first pass through speech recognition

human corrects

auto-aligns

human review

release

144 angels

433 hours of work for 126 hours of material

## LOC/health-safety

no work related accidents that caused harm

boot2root

Ilja van Sprundel and Joseph Tartaro. Video

re-watch

ioactive

lots of attack surface in many bootloaders

nvram

bitmap parser

ipxe

dhcp

tftp

ping

802.11

bluetooth

grub usb parser

tianocore usb

usb descriptor double fetch

- also used against nintendo switch

- iphone checkm8 dfu mode
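A sketch of the double fetch pattern, assuming a parser that reads a length field from the device twice: once for the bounds check and once for the copy. `MaliciousDevice` and both parse functions are hypothetical and do not reproduce any specific bootloader's code.

```python
class MaliciousDevice:
    """Hypothetical device that reports a small length on the first read
    and a larger one on every later read."""
    def __init__(self):
        self.reads = 0

    def read_descriptor_length(self) -> int:
        self.reads += 1
        return 8 if self.reads == 1 else 64

def vulnerable_parse(dev, buf_size=16):
    # First fetch: used only for the bounds check.
    if dev.read_descriptor_length() > buf_size:
        raise ValueError("descriptor too large")
    # Second fetch: used for the copy. The device may have changed it.
    return dev.read_descriptor_length()

def safe_parse(dev, buf_size=16):
    length = dev.read_descriptor_length()  # fetch once, then reuse
    if length > buf_size:
        raise ValueError("descriptor too large")
    return length

print("vulnerable copy length:", vulnerable_parse(MaliciousDevice()))
print("safe copy length:", safe_parse(MaliciousDevice()))
```

In C the vulnerable version would go on to copy more bytes than the buffer holds, so the fix is to treat device-supplied values as untrusted and read them exactly once.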

lots of user controlled data everywhere

spi flash should not be trusted

tpm should not be trusted

- send less data than expected, causes seabios integer underflow
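A sketch of how a short response turns into an integer underflow, modelling 32-bit unsigned arithmetic in Python. This is not the seabios code; the function names are made up, and the 10-byte header (tag, size, return code) is the only protocol detail assumed.

```python
U32_MASK = 0xFFFFFFFF
HEADER_SIZE = 10  # TPM response header: tag (2) + size (4) + return code (4)

def payload_length_unchecked(received: int) -> int:
    """Models `uint32_t len = received - HEADER_SIZE;` in C."""
    return (received - HEADER_SIZE) & U32_MASK

def payload_length_checked(received: int) -> int:
    """Reject responses that are shorter than the header."""
    if received < HEADER_SIZE:
        raise ValueError("short response")
    return received - HEADER_SIZE

# The device answers with only 4 bytes instead of a full header.
print(payload_length_unchecked(4))
```

The unchecked length wraps around to almost 4 GiB, so any later copy or loop that trusts it runs far past the buffer.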

smm attack surface

- uefi has largely fixed it

- 3rd party integrations often broken

- coreboot has some issues ("TODO" range check comment)

dma

- most platforms aren't using them, so any use is better than none

- uefi can enable iommu, but doesn't hand off correctly

- disables iommu during handoff!

- iommus likely have bugs

hardware mostly out of scope

- glitching, fault injection, etc

- chip security mostly requires sophisticated attacker

code integrity is hard

- revocation is not well implemented

- and CRL lists can be exhausted

- sometimes parts are not covered in signatures

- sometimes only signature existence is checked

q: best way to build laptop? coreboot+linux, but what about devices?

a: iommu can help prevent issues

q: what about bootrom quality?

a: many concerns. also underestimate the power of reverse engineering

vendors should move to more open auditing

q: what about otp bootroms?

a: maybe, but hard to know what else is on the die

q: what's a good bootloader fuzzer?

a: isolate filesystem libraries, use afl.

network fuzzing, dns fuzzing

test harness is the best way to spread load

q: what about better languages?

a: yes, but still have to be concerned.

go on the bare metal arm

only fixes most memory corruption bugs, but not iommu or other hardware bugs.

The KGB hack revisited

rewatch... video

1989 was still divided germany

howto internet in 1989: network access via acoustic coupler and local post office network

data exchange packet based (x.25 based)

local calls were cheap in west berlin, so it was a hacker's paradise

network user id (NUI) was expensive

lots of theft of NUI

ccc was founded in this era

use of corporate computers was seen as ok

mostly avoided military sites

tv went to congress to talk about wunderkind

to be more famous, one member wanted to hack government computers

pengo went to soviet mission in israel and said he wanted to hack

"sergej" at embassy gave export CoCom shopping list

made 90k marks, until a sys admin in California noticed something

Clifford Stoll saw $0.75 missing in accounting

deleted unknown user

got warrant to trace line

attacker was coming through datex-p in hannover

but hannover had old switches without traceability

hacker was only using stoll's machine for short hops

stoll created fake SDI files to delay hacker

including physical mailing address for more details

german post was able to trace back to CCC

april 1988 "Quick" publishes report

may 1988 stoll publishes "stalking the wily hacker"

july 1988: hagbard+pengo seek legal help

march 1989: five arrests, some from CCC

someone requested details over mail, not sure how they found out about it

one of the ccc members committed suicide (with conspiracy theories around it)

the issue tainted the image of CCC

russian hackers are still in the news

concerns about diverting from technical origins started in 1988

club and congress *has* changed

- kids at congress

- more women now, wider cross section

q: nl hackers work more with gov't?

q:

Plundervolt

dynamic voltage and frequency scaling

- used by gamers

- cloud servers use it to reduce costs

- thermal management

clkscrew used timing scaling to attack trustzone

- clock speeds are shared between trust domains

- glitching via software

voltjockey used voltage scaling to attack trustzone

undervolting prevents thermal throttling

- tang's phd thesis shows how to do it on intel

msr 0x150

attempt to detect failures in loop

as undervolting increases, bit flips occur in math

caused lots of crashes, had to find value where total collapse didn't happen

"given a cool name, researchers will be able to find a new vulnerability to match it in finite time"

- rowhammer in tv show

rowhammer details

sgx details

- integrity protection on ram

- but dvfs causes cache or register flips
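A simulation of the "detect failures in a loop" idea: repeat a multiplication with fixed operands while stepping the undervolt offset, and stop at the first wrong result. Nothing here touches msr 0x150; `faulty_multiply` and its fault model (flips only below -200 mV, one random bit) are invented for this sketch.

```python
import random

def faulty_multiply(a, b, undervolt_mv, rng):
    """Stand-in for a multiply on an undervolted core (invented fault model)."""
    result = a * b
    fault_probability = max(0.0, (-undervolt_mv - 200) / 1000)
    if rng.random() < fault_probability:
        result ^= 1 << rng.randrange(32)   # flip one random bit
    return result

def find_first_faulting_offset(rng, start=0, stop=-300, step=-5,
                               iterations=2000):
    """Step the offset downwards until the multiply loop sees a bad result."""
    a, b = 0xAE0000, 0x18                  # fixed operands, values arbitrary
    expected = a * b
    for mv in range(start, stop, step):
        for _ in range(iterations):
            if faulty_multiply(a, b, mv, rng) != expected:
                return mv
    return None

offset = find_first_faulting_offset(random.Random(0))
print("first faulty result at offset (mV):", offset)
```

On real hardware the hard part is the margin between "first bit flip" and "total collapse", so the step size and iteration count matter much more than in this simulation.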